Energy Researchers Aim For Holistic Approach to AI Issues

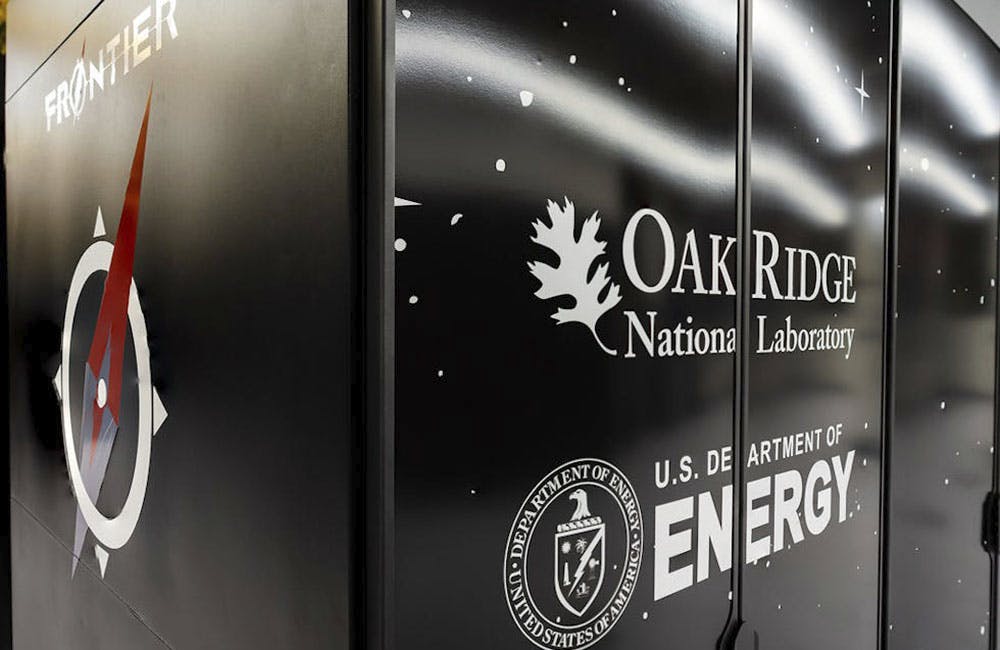

A new center at the Oak Ridge National Laboratory is looking at under-researched areas of AI to better understand how to secure it.

Researchers at the Department of Energy’s Oak Ridge National Laboratory are working to address critical and under-researched cybersecurity challenges in artificial intelligence as agencies prioritize its development across various missions.

“We are working on serious problems that impact humanity and impact national security, and our lab nurtures that kind of thinking, and so we decided to form the center to address the problems that I would say at this point, nobody is truly systematically looking at,” Edmon Bergoli, director of the lab’s Center for AI Security Research (CAISER), said at a Defense Writers Group session last week.

Bergoli and Amir Sadovnik, another research scientist at CAISER, have been studying AI development and its influence on cybersecurity since the center’s 2023 founding. Because the success of generative AI tools and large language models relies heavily on the data sets that feed them, securing that data and ensuring its accuracy are critical.

The researchers noted a longstanding misconception among the general public that AI is something only bad actors use. That misconception, they said, is a reason to better understand how AI is used in order to counter those threats.

“AI is really good at generalizing over unseen conditions. That’s really kind of a specialty, where most traditional cybersecurity was more [focused on] looking for things it’s already seen,” Sadovnik said. “AI is better at predicting things that it hasn’t seen yet.”

Another concern is the threat of deepfakes, which have helped spread misinformation and made some AI-generated media difficult to disprove. Bergoli and Sadovnik expressed concerns about deepfakes, especially in places where media literacy levels are low.

At CAISER, Bergoli and another researcher used an AI program to generate a video of a fake person, built from their merged faces and Bergoli’s voice. The video took roughly two hours to make and cost only $10, they said. Debunking it would take state-of-the-art technology months and more than $100,000, according to Bergoli.

Examples like this are also why social scientists should be involved in the study of AI, to analyze its effects on everyday people.

“There are a lot of kinds of social questions that I think are more important than the technical ones. Part of what the center is trying to do is kind of bring people from different fields to be able to answer these questions in a more holistic manner,” Sadovnik said.

In light of recent policies aimed at better regulating AI, Bergoli said he’d like to see more regulation of AI testing and greater maturity in red teaming.

“This is a very active area of research,” Sadovnik said. “I don’t think we’re there yet; it’s a relatively new thing. And I think we’re still working on figuring that out, but that’s one of the things we’re doing at CAISER.”
